Inspiration Station | 2026 Real Good Workshop

Real Good AI just wrapped its second annual workshop, the first time our full team and board have ever been in the same room together. Over two days, we celebrated a year of unprecedented growth, reviewed every program from research to livestreams to coloring pages, and set 47 ambitious milestones for 2026 (that's nearly one every week!). We also mapped out careful fundraising plans to protect our crowdfunded roots and help us grow sustainably. From SMART goals to dream stream guests to bold ideas about research transparency, the energy was contagious. None of it would be possible without you. Read more about the workshop in this thoughtful retrospective written by our very own Dr. Mandy!

AI | Art | Intent

Now, I'm no mathematician (we do have some of those on staff if you need them), but it looks like AI flattens the curve of creativity: it lifts up people with less talent and drags down those who would normally take risks with their skills.

When I brought this up to the team, they said, "You know what, you could write an article about that," so I did!

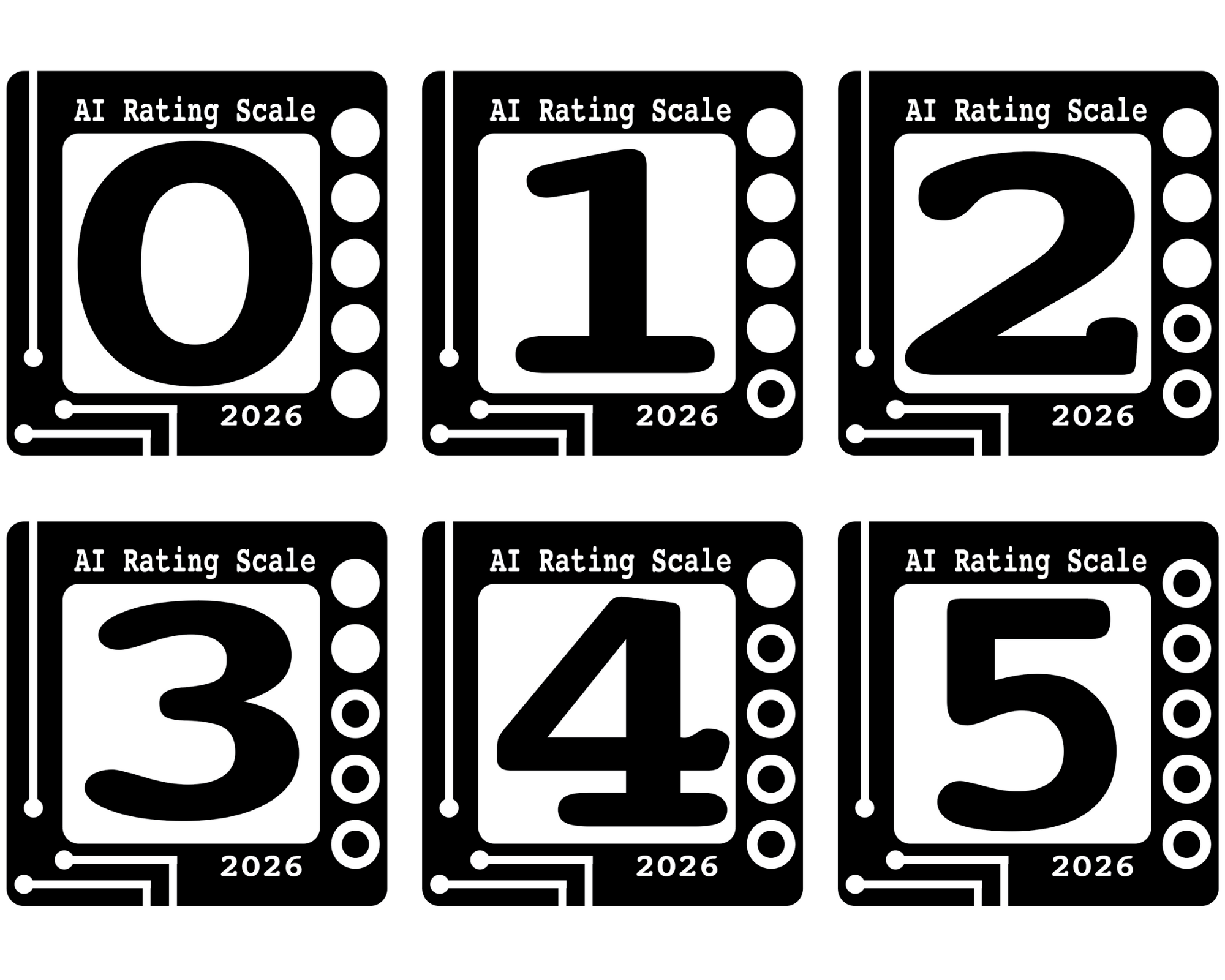

I got REALLY into it. I found sources and studies to support my hypothesis that AI is useful as a tool up to a certain point, and that point, I discovered, lines up pretty neatly with Levels 2 and 3 on our REAL Rating.

Anyway, the long and the short of it is this: humans can do one very important thing that AI can’t.

And that is acting with intent.

Every word in this summary was chosen to convey a specific message; should that message fall flat, I'd have to explain myself and do better next time. AI can't offer an explanation; it's just a fancy rock doing Mad Libs. What it can be is a useful tool, and it is changing human creativity.

Whether that's for better or for worse is yet to be determined.

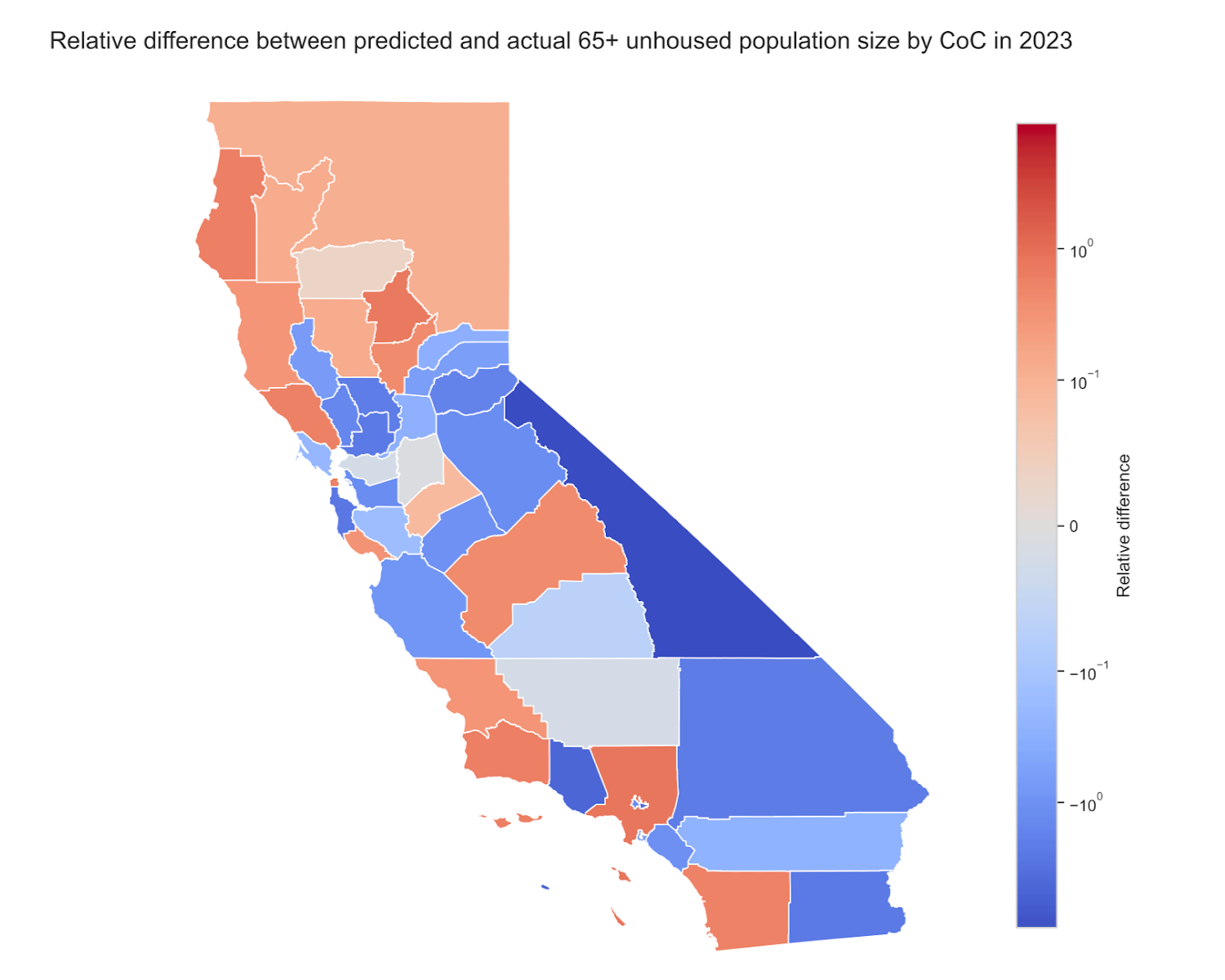

How effective are different parts of California at addressing homelessness?

It started when we partnered with the California Elder Justice Coalition (CEJC), a nonprofit organization already advocating for justice for unhoused elders. California has only 12% of the total US population but 24% of its unhoused population. It is hard to know what to advocate for without knowing which programs are the most effective, or even whether they are making any difference at all! We set off to answer questions like: Where is the unhoused population larger or smaller than expected? Luckily, the evidence is public data; we just needed to build the models to help interpret it.

Demystifying AI Usage

Have you heard about the AI usage rating system our team has been working on? What if there were a way to tag things online with a rating showing how much AI was used to create them?

On the Right Track: A Full STEAM Ahead Retrospective

Behind the Boiler: A ST(R)EAMing success? We sat down and broke down the good, the bad, and the ugly of our very first flagship fundraiser. Read about what we learned and what we plan to change in the future.

Point Process Santa for Christmas

Santa Claus Point Process! Shout-out to Santa for his cameo in our fourth and final coloring page of 2025, a gift from us to you created by the talented KALEIDOSOULS! Check it out!

Rumination 2025: Year One

Not your average nonprofit: a series of ruminations from the Real Good team about their experiences in the first year of a brand-new type of nonprofit. It mixes AJ's perspective with input from the full team in a roundtable. READ ALL ABOUT IT!

New Year, New Real Good Resolutions!

Even nonprofits can have resolutions! We have (at least) four of them! All of them are ambitious, but we're so ready for 2026, you don't even know, dudes. Check them out.